Lidt om alt og meget om GIS - A little about everything and a lot about GIS.

Thursday, July 31, 2008

We knew the web was big...

Google is gigantic, or rather the Internet is gigantic - Gigantic with a capital G. Then again, it is merely a reflection of what we are as humans, as individuals, as groups and so on. With the Internet and Google (and others like it), we have for the first time in history access to vast resources of data and information, and a lot more. This gives us a solid foundation on which to act and make important decisions - we become better informed and, on that basis, create new knowledge and new information. Google is big, and it will never cease to grow.

/Sik

We've known it for a long time: the web is big. The first Google index in 1998 already had 26 million pages, and by 2000 the Google index reached the one billion mark. Over the last eight years, we've seen a lot of big numbers about how much content is really out there. Recently, even our search engineers stopped in awe about just how big the web is these days -- when our systems that process links on the web to find new content hit a milestone: 1 trillion (as in 1,000,000,000,000) unique URLs on the web at once!

How do we find all those pages? We start at a set of well-connected initial pages and follow each of their links to new pages. Then we follow the links on those new pages to even more pages and so on, until we have a huge list of links. In fact, we found even more than 1 trillion individual links, but not all of them lead to unique web pages. Many pages have multiple URLs with exactly the same content or URLs that are auto-generated copies of each other. Even after removing those exact duplicates, we saw a trillion unique URLs, and the number of individual web pages out there is growing by several billion pages per day.
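The link-following process described above can be sketched as a breadth-first traversal. This is only a minimal illustration, not Google's crawler: the `TOY_WEB` dictionary, the example URLs and the `crawl` function are all made up for the example, and "exact duplicate" is assumed to mean identical page content, detected here with a content hash.

```python
from collections import deque
import hashlib

# Hypothetical toy "web": URL -> (page content, outgoing links).
TOY_WEB = {
    "a.example/": ("home", ["a.example/news", "a.example/index.html"]),
    "a.example/index.html": ("home", ["a.example/news"]),  # same content as "a.example/"
    "a.example/news": ("news", ["b.example/"]),
    "b.example/": ("other site", []),
}


def crawl(seeds, web):
    """Follow links breadth-first from the seed URLs.

    Returns (seen_urls, unique_pages): every URL discovered, and one
    representative URL per distinct page content.
    """
    seen_urls = set(seeds)
    queue = deque(seeds)
    unique_pages = {}  # content hash -> first URL found with that content
    while queue:
        url = queue.popleft()
        if url not in web:  # dead link: nothing to fetch
            continue
        content, links = web[url]
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        unique_pages.setdefault(digest, url)  # keep only the first URL per content
        for link in links:
            if link not in seen_urls:
                seen_urls.add(link)
                queue.append(link)
    return seen_urls, sorted(unique_pages.values())


urls, pages = crawl(["a.example/"], TOY_WEB)
print(len(urls), "URLs seen,", len(pages), "unique pages")
```

In this toy graph, four URLs are discovered but only three distinct pages remain after removing the exact duplicate, which mirrors the distinction the post draws between links found and unique pages.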

So how many unique pages does the web really contain? We don't know; we don't have time to look at them all! :-) Strictly speaking, the number of pages out there is infinite -- for example, web calendars may have a "next day" link, and we could follow that link forever, each time finding a "new" page. We're not doing that, obviously, since there would be little benefit to you. But this example shows that the size of the web really depends on your definition of what's a useful page, and there is no exact answer.

We don't index every one of those trillion pages -- many of them are similar to each other, or represent auto-generated content similar to the calendar example that isn't very useful to searchers. But we're proud to have the most comprehensive index of any search engine, and our goal always has been to index all the world's data.

To keep up with this volume of information, our systems have come a long way since the first set of web data Google processed to answer queries. Back then, we did everything in batches: one workstation could compute the PageRank graph on 26 million pages in a couple of hours, and that set of pages would be used as Google's index for a fixed period of time. Today, Google downloads the web continuously, collecting updated page information and re-processing the entire web-link graph several times per day. This graph of one trillion URLs is similar to a map made up of one trillion intersections. So multiple times every day, we do the computational equivalent of fully exploring every intersection of every road in the United States. Except it'd be a map about 50,000 times as big as the U.S., with 50,000 times as many roads and intersections.
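For readers curious about what "computing PageRank" over a link graph involves, here is a minimal sketch of the textbook power-iteration formulation. It is an assumption-laden illustration, not Google's production system: the damping factor of 0.85, the iteration count, the handling of pages without outgoing links and the three-page graph are all choices made for this example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook power-iteration PageRank.

    links maps each page to the list of pages it links to.
    Returns a dict page -> rank; the ranks sum to 1.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts each round with the "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Page with no outgoing links: spread its rank over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical three-page graph: A links to B and C, B links to C, C links to A.
toy_links = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
ranks = pagerank(toy_links)
for page, score in sorted(ranks.items()):
    print(page, round(score, 3))
```

Page C ends up with the highest rank because it receives links from both other pages. Running the same idea over a trillion URLs several times a day is what requires the distributed infrastructure the post goes on to mention.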

As you can see, our distributed infrastructure allows applications to efficiently traverse a link graph with many trillions of connections, or quickly sort petabytes of data, just to prepare to answer the most important question: your next Google search.

Posted by Jesse Alpert & Nissan Hajaj, Software Engineers, Web Search Infrastructure Team

Source: http://googleblog.blogspot.com/2008/07/we-knew-web-was-big.html

ArcIMS 9.3 Java Custom and Java Standard Viewers, ColdFusion Connector, and Platform Deprecation Notice

It's official!

/Sik

To: Users of ArcIMS

Subject: Important Notification—ArcIMS 9.3 Java Custom and Java Standard Viewers, ColdFusion Connector, and Platform Deprecation Notice

ESRI has always been committed to providing our customers with the highest level of support and service. However, over time, some components of mature ESRI software must be marked for deprecation. Deprecation means that a particular feature is included in the product but that the support for this feature is now at the mature support level:

- No patches or hot fixes

- No new functionality

- No certification

- Phone support

- E-mail/Fax support

- Online Support Center

At 9.2, ESRI announced the deprecation of these ArcIMS components:

- ArcSDE connection administration in the Service Administrator (not the entire Service Administrator)

- Java Viewer API for use with the Java Custom Viewer

- ActiveX Connector

At 9.3, ESRI is announcing the deprecation of these ArcIMS components:

- The Java Custom and Java Standard Viewers (Users should consider using the Web Mapping Application that is part of the .NET and Java Web Application Developer Framework [ADF]. Note: The HTML Viewer has not been deprecated.)

- ColdFusion Connector

This means that the Java Custom and Java Standard Viewers and the ColdFusion Connector will not be included with the next release after 9.3.

In addition to these two components, 9.3 will be the last ArcIMS release to support the HP-UX PA-RISC and Windows 2000 Server operating systems.

We recognize the investment our customers have made in ArcIMS software, related hardware, and staff training, so we want to assure you that we will continue to support the core ArcIMS components such as the Spatial Server and the Metadata Server as well as any other component not listed above.

If you have any questions, please contact your local ESRI regional office manager or international distributor.

Sincerely,

Dirk Gorter

Director of Product Management

ESRI

MediaSet, Silvio Berlusconi, Sue YouTube For $800 Million

That man is insane!

/Sik

Michael Learmonth July 30, 2008 1:35 PM

Google and YouTube just found themselves in more legal/political hot water -- in Italy. MediaSet, the dominant TV provider in Italy controlled by Prime Minister Silvio Berlusconi, sued Google (GOOG) and YouTube in a Rome court, seeking "at least" $779 million in damages.

The Milan-based company found 4,643 videos from MediaSet companies on YouTube on June 10, representing 325 hours of broadcasting, the company said. Based on the number of hits generated by the clips, MediaSet claims it lost the equivalent of 315,672 broadcasting days.

Of course, most of that online viewing would have been lost to TV anyway since it occurred after the initial broadcast of the video. Still, it looks like YouTube isn't having much better luck screening copyrighted video from Italy than it is clips of "The Daily Show."

But it's a good reminder that Viacom isn't the only one suing Google. Other parties suing the firm include the English Premier League, The Scottish Premier League, The Tennis Federation of France, Cherry Lane Music, Murbo Music Publishing, France's TF1, as well as another MediaSet company, Spain's Telecinco.

Meanwhile, Viacom and Google are negotiating the transfer of YouTube logging data this week, which Viacom hopes to use to establish just how many of its clips made it on the system -- to support its claim for $1 billion in damages.

Source: http://www.alleyinsider.com/2008/7/mediaset-silvio-berlusconi-sue-youtube-for-800-million

Microsoft VE to be in ESRI's ArcGIS Online

Still interesting news.

/Sik

The press release came out yesterday and was picked up by a number of blogs, though few had any immediate comment. I'm in the same boat. It crossed my desk and I didn't get an immediate sense of a world-changing event. That said:

- ESRI rarely announces anything new before the User Conference.

- Delivery of Virtual Earth content via ArcGIS Online was hinted at in the ESRI UC Q & A (including pricing: $200/year per ArcGIS seat).

- ESRI has yet to officially launch ArcGIS Online; that's coming next month.

- ESRI's "Google" announcement at Where was rather "hands off," that is, it seemingly required little partnering. This is different from a business standpoint, since I have to believe Microsoft will receive some money from each subscription for its data.

Source: http://apb.directionsmag.com/archives/4576-Microsoft-VE-to-be-in-ESRIs-ArcGIS-Online.html

Explorer at the User Conference

4 days ...

/Sik

We've been readying for the 2008 User Conference, and we're looking forward to meeting existing and soon-to-be Explorer users in San Diego. Please stop by and visit us in the Showcase area. We'll be happy to answer your questions, and show you some of the interesting and powerful things you can do with Explorer 480. We'll also be giving a preview of the upcoming Explorer 600 release. And of course we're there to listen to and gather your comments and suggestions as we move forward with our development plans.

There's a handy Agenda Search tool on the ESRI Web site. Just type in "ArcGIS Explorer" to get a list of sessions to consider attending. Here are the results of that search, and we've highlighted some of the sessions that we recommend to help you plan your conference. Just follow the Explorer globe. See you there!

Read more: http://blogs.esri.com/Info/blogs/arcgisexplorerblog/archive/2008/07/30/explorer-at-the-user-conference.aspx

Wednesday, July 30, 2008

The unexpected surprise...

Is there light at the end of the tunnel, or is it just another train coming your way?

Sounds almost too good to be true. Our IMS will very soon be history, so let's cross our fingers that the future looks brighter ...

/Sik

Posted At: 12:09 PM | Posted By: Jason | Related Categories: ArcGIS JS API, ArcGIS General

I hadn't counted on this.

For you ArcIMS veterans, you know that it can be very, er, temperamental at times. If you are responsible for a high-volume site, you don't just know it, you live it. You've written scripts to start and stop it. You've written scripts to ping your mapserver. You can refresh your services via the command line. You have full logging on more than you'd like to. Heck, we have a utility that we wrote to send us a text message if a service comes back as unresponsive.

I really wasn't looking forward to the management of a production ArcGIS Server. We have been using it since its inception at version 9, and have always found it to be temperamental as well.

However, with the advent of the REST API (plus its JavaScript wrapper), and the extensive use of tile caching, we have found a most unexpected benefit. ArcGIS Server, when utilizing REST & JS, is very easy to manage. No more corrupted sez files. No more runaway aimserver.exe's. Strange. I'm not used to it yet.

Of course, there are some obvious reasons for this, which I would say are mainly the heavy use of caches and client-side code. Granted, these apps are simple in comparison (let's say vs. a heavy-duty ArcIMS site). My hope is that as these new APIs continue to evolve and our apps increase in complexity, the management of the server remains as straightforward as it is now.

Source: http://www.roktech.net/devblog/index.cfm/2008/7/8/The-unexpected-surprise

Near Real-Time Aerial Imagery

A photo a day? A bit scary though ...

/Sik

The Ogle Earth blog has a small blurb from an ITWire article. Here is the blurb from Ogle Earth, quoting the ITWire article: "According to [Perth-based] Ipernica, 'NearMap's technology enables very high resolution aerial photomaps with multiple angle views to be created at a fraction of the cost of traditional solutions... For the first time, people will be able to see the environment change over time, as NearMap's online photomaps allow users to move back and forward month by month to see changes occur, such as the construction of a home or development of a new road. [And] with NearMap's revolutionary approach to high resolution photomaps, it has achieved its objective of a 20-fold operating cost reduction over current industry practices.'"

Source: http://technology.slashgeo.org/technology/08/07/24/1610222.shtml

Power to the print

Looks nice but I don't need all those street view images ...

/Sik

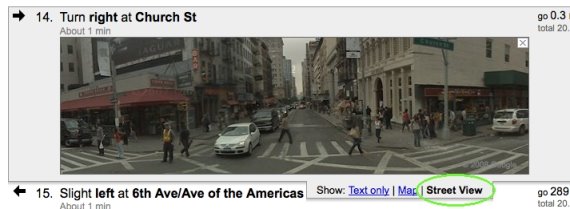

Wednesday, July 30, 2008 at 11:10 AM

Google Maps can help get you where you want to go, but let's face it, you can't be online all the time. Sometimes you really just need a good old-fashioned hard copy, so we're making it easier to print exactly the information you want: text directions, maps for any step, and - for the first time - Street View images.

Primer: GeoJSON

GeoJSON ... that was a new one. One might look into it ...

/Sik

By Adena Schutzberg, Directions Magazine, July 30, 2008

Unless you are a developer, you may not have run into JSON or its "partner in crime" for geo, GeoJSON. Directions Magazine covered it as emerging technology last year. The format has "made the big time" in some sense, as the final specification is complete and Safe Software announced full support for it. This "for non-programmers" short essay will answer these key questions: What is it? Why is it special?

JSON stands for JavaScript Object Notation and, per the JSON wiki (currently unavailable, but see the JSON page instead), is a lightweight data-interchange format. It is text-based, meaning humans and machines can easily read it. The format includes just simple pairs; the first term in a pair is the "name" and the second is the "value." Here's what it looks like if you store an address book entry in JSON:

{

"addressbook":

{

"name": "Mary Lebow",

"address":

{

"street": "5 Main Street",

"city": "San Diego, CA",

"zip": 91912

},

"phoneNumbers": ["619 332-3452", "664 223-4667"]

}

}
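To show that "text-based" really does mean machines can read it easily, here is a small sketch that parses an address book entry like the one above with Python's standard `json` module. The variable names are only illustrative, and the JSON text is restated inside the example so that it is self-contained.

```python
import json

# The address book entry as JSON text (restated here so the example runs on its own).
text = """
{
  "addressbook": {
    "name": "Mary Lebow",
    "address": {
      "street": "5 Main Street",
      "city": "San Diego, CA",
      "zip": 91912
    },
    "phoneNumbers": ["619 332-3452", "664 223-4667"]
  }
}
"""

entry = json.loads(text)["addressbook"]   # JSON objects become Python dicts
city = entry["address"]["city"]           # nested name/value pairs
first_phone = entry["phoneNumbers"][0]    # JSON arrays become Python lists

# Writing the data back out and reading it again gives the same structure.
round_trip = json.loads(json.dumps(entry)) == entry

print(city, first_phone, round_trip)
```

Note that a JSON parser is strict: a missing comma between pairs or a trailing comma before a closing brace makes the whole text invalid.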

One of the things that distinguishes this encoding from XML (eXtensible Markup Language) is the characters used to separate the data. In JSON you see { }, [ ] and ,. In XML you'd see <> and /. Big deal? Yes, says Daniel Rubio in his Introduction to JSON: "As it turns out, the internal representation used by JavaScript engines for data structures like strings, arrays, and objects are precisely these same characters." So, this JSON encoding is better tailored for JavaScript. That said, the format, like most data formats, is language independent. You can use any language you like to write, read or process the data.

Now, the "geo" part. GeoJSON is a project (wiki) to explore putting geographic data into JSON. The outcome is a recently released 1.0 specification. It documents the details of storing geographic features. In short, one of the "names" in the "name/value" pairs noted above must be "type." GeoJSON supports these types: "Point," "MultiPoint," "LineString," "MultiLineString," "Polygon," "MultiPolygon," "Box," "GeometryCollection," "Feature" or "FeatureCollection." And, as you can imagine, there are some rules about how the geometry details of each type are stored. Optionally, GeoJSON objects can have a "name" called "crs" which defines the coordinate system. If there is no "crs," the data are assumed to be in the WGS 84 datum in decimal degrees. So, a point looks like this:

{

"type": "Point",

"coordinates": [100.0, 0.0]

}

You can add properties (that is, other name/value pairs) to create features. And, of course, features can include other objects and features. Thus, you can build some very complex geometries with quite a few properties, as needed. To get a sense of what these files look like in contrast to other formats, try out this OpenLayers tool. You can draw geometry on the map and see its encoding in GeoJSON, KML and other vector formats.
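As a rough sketch of how a point becomes a feature with properties, the following Python snippet wraps the point shown earlier in a "Feature" and then in a "FeatureCollection". The helper `make_point_feature` and the property `"name": "Sample point"` are made up for this illustration; only the "type", "geometry", "coordinates", "properties" and "features" names come from the GeoJSON format itself.

```python
import json


def make_point_feature(lon, lat, properties):
    """Build a GeoJSON-style Feature dict around a Point geometry."""
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": dict(properties),  # arbitrary name/value pairs
    }


# Hypothetical feature: the sample point plus one invented property.
feature = make_point_feature(100.0, 0.0, {"name": "Sample point"})

# Features can in turn be grouped into a FeatureCollection.
collection = {"type": "FeatureCollection", "features": [feature]}

encoded = json.dumps(collection, indent=2)
print(encoded)
```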

The yellow box in the figure is encoded this way in GeoJSON by the OpenLayers demo.

{"type":"Feature", "id":"OpenLayers.Feature.Vector_124", "properties":{}, "geometry":{"type":"Polygon", "coordinates":[[[-1.40625, 24.2578125], [-4.21875, 7.3828125], [42.890625, 3.8671875], [29.53125, 29.1796875], [10.546875, 25.6640625], [-1.40625, 24.2578125]]]}, "crs":{"type":"OGC", "properties":{"urn":"urn:ogc:def:crs:OGC:1.3:CRS84"}}}
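Because the encoding is plain JSON, any JSON parser can work with it. The sketch below, using only Python's standard library, reads the polygon feature above, checks that its outer ring is closed (first and last coordinate equal) and derives a simple bounding box. The variable names are illustrative, and the bounding-box calculation is an addition for this example rather than something the OpenLayers demo does.

```python
import json

# The polygon feature produced by the OpenLayers demo, restated as a Python string.
feature_text = (
    '{"type":"Feature", "id":"OpenLayers.Feature.Vector_124", "properties":{}, '
    '"geometry":{"type":"Polygon", "coordinates":[[[-1.40625, 24.2578125], '
    '[-4.21875, 7.3828125], [42.890625, 3.8671875], [29.53125, 29.1796875], '
    '[10.546875, 25.6640625], [-1.40625, 24.2578125]]]}, '
    '"crs":{"type":"OGC", "properties":{"urn":"urn:ogc:def:crs:OGC:1.3:CRS84"}}}'
)

feature = json.loads(feature_text)
ring = feature["geometry"]["coordinates"][0]  # first (outer) ring of the polygon

# A polygon ring starts and ends on the same coordinate.
is_closed = ring[0] == ring[-1]

# Coordinates are [longitude, latitude] pairs.
lons = [point[0] for point in ring]
lats = [point[1] for point in ring]
bbox = [min(lons), min(lats), max(lons), max(lats)]

print(is_closed, bbox)
```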

The GeoJSON wiki lists some 20 implementations in code libraries, desktop and Web apps. There are only a few commercial players supporting GeoJSON at this point, but that may change in the coming months.

Source: http://www.directionsmag.com/article.php?article_id=2827&trv=1

iPhone Users Lead Mobile Maps Usage in the US

It might be that the iPhone is the one cool device right now, and it might be the one and only device for a while. It usually doesn't take long before cheaper alternatives show up, and some might be just as good. That doesn't, however, change the fact that I just gotta have one ...

/Sik

“The mobile phone as a personal navigation device makes tremendous sense,” observed Mark Donovan, senior analyst at comScore.

It surely won't be long before Apple's iPhone lineup is the leading device for mobile maps usage. Just you wait and see.

Yahoo Local API Now Lets You Search Along Routes

Next up is a search for other people along the route.

/Sik

Earlier this week Yahoo announced the release of a new version of its Yahoo Local Search API. This new release adds some interesting functionality: it allows developers to issue queries to the API based on a route.

According to Yahoo:

We are releasing a new version of the Yahoo! Local API (V3), that gives developers a key new feature – the ability to search for convenient points of interest for a given user defined route. For example, as you are driving from San Francisco to Sacramento, you could search for a Starbucks or for a hardware store.

The RESTful API now includes a “route” parameter that is a series of latitude/longitude coordinates, with the first pair of coordinates as the starting point and the last pair of coordinates as the ending point. A string sent as the route parameter would be structured as follows:

The API returns XML, JSON, or serialized PHP. If you request the response in JSON, you can include a callback parameter to a JavaScript function. I put together the example below to demonstrate how the new Local Search API can be used in conjunction with Yahoo! Maps to display pizza restaurants along a route in Portland, Oregon (the example below requests data in JSON format and includes a callback parameter request).
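A JSON response requested with a callback parameter arrives wrapped in a call to the named JavaScript function, a pattern usually called JSONP. The sketch below shows, in Python, how such a wrapped response could be unwrapped and parsed outside a browser. Everything specific in it is an assumption for illustration: the callback name `showResults`, the helper `unwrap_jsonp` and the sample payload are invented and do not reflect the actual response format of the Yahoo! Local API.

```python
import json


def unwrap_jsonp(body, callback):
    """Strip a 'callback( ... );' wrapper and parse the JSON inside it."""
    body = body.strip()
    prefix = callback + "("
    if not body.startswith(prefix):
        raise ValueError("response is not wrapped in the expected callback")
    inner = body[len(prefix):].rstrip(";").rstrip()
    if not inner.endswith(")"):
        raise ValueError("callback wrapper is not closed")
    return json.loads(inner[:-1])  # drop the closing parenthesis


# Hypothetical wrapped response; the real API's fields will differ.
sample = 'showResults({"results": [{"title": "Example Pizza"}]});'
data = unwrap_jsonp(sample, "showResults")
print(data["results"][0]["title"])
```

In a browser, no unwrapping is needed: the page defines the callback function, and the wrapped response simply calls it with the data.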

Our Yahoo! Local Search API profile includes additional information on the API and lists several mashups that use this API, including Ski Bonk and Boston T Subway Map. Additional documentation on the API is available on the Yahoo Developer Network.

We’re excited to see some route-based mashups emerge in the near future.

Source: http://blog.programmableweb.com/2008/07/30/yahoo-local-api-now-lets-you-search-along-routes/

NASA launches online historical image gallery

Brian Fonseca

July 29, 2008 (Computerworld) NASA last week launched a new interactive Web site, jointly developed with the non-profit Internet Archive, which initially combines some 21 separately stored and managed NASA imagery collections into a single online resource featuring enhanced search, visual and metadata capabilities.

The new portal, located here, stores more than 140,000 digitized high-resolution NASA photographs, audio and film clips. The launch of the site marks the end of the first phase of a five-year joint NASA-Internet Archive effort to ultimately make millions of NASA's historic image collection accessible online to the public and to researchers, noted Debbie Rivera, manager of strategic alliance at NASA.

The first content available on NASA's imagery Web site includes photos and video of the early Apollo moon missions, views of the solar system from the Hubble Space Telescope and photos and videos showing the evolution of spacecraft and in-flight designs.

The five-year joint development agreement signed in 2007 will also lead to the embedding of Web 2.0 tools into the site. For example, engineers are developing Wikis and blogs for users to share content. The team has already started adding metatags to improve search results, Rivera said. "There's a lot more to come," she added. "This is only the beginning."

In about a year, the partnership will tackle the enormous task of on-site digitizing of still images, films, film negatives and audio content currently stored on analog media devices across NASA field centers, Rivera said. Speed is essential, she noted, as some of NASA's older analog recordings and film footage of events as far back as 1915 are "disintegrating. This is one of the largest aspects of this partnership," she admitted.

Internet Archive, founded in 1996 to create an Internet-based library, will manage and host NASA's new interactive image gallery on the cluster of 2,000 Linux servers at its San Francisco headquarters, said John Hornstein, director of the NASA images project for the group. The non-profit currently runs 2 petabytes of storage, Hornstein said.

Hornstein acknowledged some hiccups following the launch of the site last week when servers crashed causing intermittently sluggish response times. In addition, software around the zoom-in functionality of thumbnail images on the NASA web site is still being de-bugged. He downplayed any lingering effects, however. "We're just finding where the issues are and we don't see any of this as an ongoing problem," remarked Hornstein.

Internet Archive is using software donated by Luna Imaging Inc. to help develop and support the NASA images project.

Source: http://www.computerworld.com/action/article.do?command=printArticleBasic&articleId=9110976

Import/Export Google SketchUp to trueSpace

It is all connected no matter how you look at it ... We only have one globe

/Sik

July 29th, 2008 · No Comments · GIS

I blogged about my concern that Microsoft wasn’t supporting SketchUp files in trueSpace last week and I said I’d report back on what I learned.

As long as you have Google SketchUp Pro, the process is really easy. I opened a model in Google SketchUp Pro and exported as a 3ds Max file. But that was the least of my worries. First off, don’t be fooled by Microsoft owning trueSpace though. This product obviously has had zero input from the Microsoft team. The install program wants to install the thing at “C:\trueSpace76”, not putting it in the logical Program Folders place. Second, how many file menus does a program need? Trying to just find a place to import the 3D Studio Max file was difficult. Eventually I realized I wanted the other File menu (the left file menu, not the right file menu).

Read on: http://www.spatiallyadjusted.com/2008/07/29/importexport-google-sketchup-to-truespace/

Microsoft prepares for end of Windows with Midori

It's gonna take some time, but Windows might end up as 'webdows' one day. Google, look out.

/Sik

Midori is a componentized, non-Windows OS that will take advantage of technologies developed since the advent of Windows and likely will be Internet-based

By Elizabeth Montalbano, IDG News Service, July 29, 2008

With the Internet increasingly taking on the role of the PC operating system and the growing prevalence of virtualization technologies, there will be a day when the Microsoft Windows client OS as it's been developed for the past 20-odd years becomes obsolete.

Microsoft seems to be preparing for that day with an incubation project code-named Midori, which seeks to create a componentized, non-Windows OS that will take advantage of technologies not available when Windows first was conceived, according to published reports.

Although Microsoft won't comment publicly on what Midori is, the company has confirmed that it exists. Several reports -- the most comprehensive to date published on Tuesday by Software Development Times -- have gone much further than that.

That report paints Midori as an Internet-centric OS, based on the idea of connected systems, that largely eliminates the dependencies between local applications and the hardware they run on that exist with a typical OS today.

The report claims Midori is an offshoot of Microsoft Research's Singularity OS, which creates "software-isolated processes" to reduce the dependencies between individual applications, and between the applications and the OS itself.

With the ability today to run an OS, applications -- and even an entire PC desktop of applications -- in a virtual container using a hypervisor, the need to have the OS and applications installed natively on a PC is becoming less and less, said Brian Madden, an independent technology analyst.

"Why do you need it?" he said. "Now we have hypervisors everywhere."

Madden suggested that a future OS could actually be a hypervisor itself, with virtual containers of applications running on top of it that can be transferred easily to other devices because they don't have client-side dependencies to each other.

And while he has no information about Midori beyond the published reports, he said descriptions of it as an Internet-centric system that provides an overall "connectedness" between applications and devices makes sense for the future of cloud computing and on-demand services. Microsoft likely recognizes the need for this even if the actual technology is still five or more years out, Madden said.

"They're preparing for the day when people realize we don't need Windows anymore" and thinking about what they will do to remain relevant, he said.

Indeed, Microsoft has been emphasizing its virtualization strategy, based on its new Hyper-V hypervisor, beyond merely virtualizing the server OS. The company also is moving full steam ahead with plans to virtualize applications and the desktop OS as well.

Using virtualization in these scenarios would eliminate the problems with application compatibility that are still giving headaches to Vista users, and that have made the OS a liability rather than a boon for some Windows power users and enterprise customers.

If Midori is close to what people think it is, it will represent a "major paradigm shift" for Windows users and be no easy task for Microsoft to pull off, said Andrew Brust, chief of new technology for the consulting firm Twentysix New York.

He said challenges to an OS like Midori would be both technological complexities and the "sobering compromises" that must be made when a product moves from being a research project into commercialization. "I would expect those in abundance with something of this scope and import," Brust said.

Though he has not been briefed by Microsoft on Midori, Brust said the idea makes sense because Microsoft needs to drastically update Windows to stay current with new business models and computing paradigms that exist today -- particularly to help the company compete against Google on the Web.

"Breaking with the legacy of a product that first shipped 23 years ago seems wholly necessary in terms of keeping the product manageable and in sync with computing’s state of the art," Brust said. "If Midori isn’t real, then I imagine something of this nature still must be in the works. It’s absolutely as necessary, if not more so, to Microsoft's survival as their initiatives around Internet advertising, search and cloud computing offerings."

Source:

Tuesday, July 29, 2008

Google Map - New Look

Google has redesigned the look of Google Maps. It is a cleaner look; unfortunately, I experienced some unintentional map tile selections (which made the image tiles blur). It is rare to see Google ship such obvious bugs, but they will probably fix the problem soon. Another (not new) feature is the overlay of geotagged photos in the current map extent. It does look great.

/Sik

Source: http://map.google.com/

Microsoft Virtual Earth to Be Offered With ESRI’s ArcGIS Online Services

Close cooperation between ESRI and Microsoft comes in handy a few days before UC 2008.

/Sik

REDMOND, Wash., and REDLANDS, Calif. — July 29, 2008 — Microsoft Corp. and ESRI today announced that ESRI ArcGIS users will have access to the robust mapping and imagery content provided by the Microsoft Virtual Earth platform inside ArcGIS Desktop and ArcGIS Server. With Virtual Earth seamlessly integrated into ArcGIS 9.3 Desktop, ArcGIS users are now able to easily add base maps, which feature rich aerial and hybrid views, to perform data creation, editing, analysis, authoring and map publishing with one-click access.

“Microsoft and ESRI have a long-standing history of delivering complete geographic information systems solutions,” said Erik Jorgenson, corporate vice president at Microsoft Corp. “The integration of Virtual Earth and ArcGIS 9.3 at both desktop application and platform levels enables customers to better view, analyze, understand, interpret and visualize geographic data. This new offering further extends the software-plus-services choices available to our mutual customers.”

“With the new ArcGIS Online Virtual Earth map services, ArcGIS Desktop and ArcGIS Explorer users will have nearly instant access to some of the world’s finest base map layers,” said S.J. Camarata, Director of Corporate Strategies at ESRI. “Through this collaboration with Microsoft, street maps, vertical imagery and hybrid maps will be available on an affordable, annual subscription fee basis.”

Together, Virtual Earth and ArcGIS Online services will enable customers to access resources that add comprehensive depth to their projects. The seamless integration of Virtual Earth throughout the entire ArcGIS system makes it easier for ArcGIS customers to offer their end users better insight into their services, which can inspire deeper involvement and participation with their valuable GIS data.

The ArcGIS Online Virtual Earth map services will include high-resolution street maps, imagery and hybrid maps. Street map coverage is available for more than 60 countries and regions including North America, Europe, South America, the Asia Pacific region and Northern Africa. Aerial and satellite imagery includes worldwide coverage but varies by region. ESRI integrates with other Microsoft products that may be part of a user’s Virtual Earth solution to incorporate internal and third-party data—making information more discoverable, more visual, and better to take action upon.

Land planning, site selection, crime analysis, road network analysis and delivery network management are just a few examples of how ArcGIS desktop users, including ArcGIS Explorer users, can leverage ArcGIS Online Virtual Earth map services. ArcGIS Server users will be able to connect to ArcGIS Online Virtual Earth Map Services at a later date, through a service pack.

Users can preview Virtual Earth street maps, imagery and hybrid map layers at http://resources.esri.com/arcgisonlineservices. To learn more about ArcGIS Online, visit www.esri.com/arcgisonline.

Source: http://www.microsoft.com/presspass/press/2008/jul08/07-29ESRIPR.mspx?rss_fdn=Press%20Releases

HotelMapSearch.com Launches Industry's First Hotel Price Map

Search hotels world wide.

/Sik

Source: http://www.hotelmapsearch.com

New Virtual Earth ASP.NET Control Released

Hey everyone, Angus Logan here. I’m the technical product manager across all of the Live Platform APIs.

Today we’re refreshing the Windows Live Tools for Microsoft Visual Studio (CTP). Over the past few months we’ve fixed lots of bugs and made sure the controls are up to date… but what I’m super excited about is the addition of the Microsoft Virtual Earth ASP.NET Control.

The Windows Live™ Tools for Microsoft® Visual Studio® 2008 are a set of control add-ins to make incorporating Windows Live services into your Web application easier with Visual Studio 2008 and Visual Web Developer 2008.

You can download the controls from here and we would love your feedback.

We now have 6 controls you can easily drag into your ASP.NET web applications:

- Map Control *new!* (reference) - link coming shortly

- Contacts Control (reference)

- IDLogin Control (reference)

- IDLoginView Control (reference)

- MessengerChat Control (reference)

- SilverlightStreamingMedia Control (reference)

Check out this video on Channel 9 where Mark Brown and I demo the control

http://channel9.msdn.com/posts/Mark+Brown/417721/player/

Read on: http://dev.live.com/blogs/devlive/archive/2008/07/27/386.aspx

Entrepreneurs want to outcompete Google

See also: http://www.webpronews.com/topnews/2008/07/28/cuil-crashes-and-burns-at-launch

/Sik

Entrepreneurs want to outcompete Google

A new kind of search engine aims to change the way we search and to give context a higher priority. The people behind it come from Google themselves and have clear ideas about how search should be improved.

You would not think it possible, but the new search engine Cuil claims to have more than 120 billion web pages in its index. Many may think this is just exaggeration and a PR stunt, but the people behind it are not just anybody in the industry.

In 2004 Anna Patterson sold her search technology, called Recall, to Google and subsequently worked at Google. But she was not entirely satisfied with the company's philosophy and conduct, so she left in 2006 to start over and build her own search engine, Cuil.

Heavy hitters

The people behind Cuil, which is pronounced "cool," are not just anybody in search engine technology. Besides Anna Patterson and her husband, Tom Costello, who developed the search engine Xift in the 1990s and later an analysis engine for IBM, the team consists of two other ex-Google employees, Russell Power and Louis Monier.

While Russell Power was one of Anna Patterson's colleagues at Google, Louis Monier has in-depth experience from several companies. Before Google was more than just an idealistic garage project, Louis Monier was CTO of the search engine AltaVista. Later, he helped develop eBay's search technology.

The idea behind Cuil is to focus on the content of web pages and to deliver context-relevant keywords and suggestions as you search.

More layout-oriented

The search results are shown in a layout that looks more like a magazine than classic search results. Among other things, the results are often accompanied by an image, and categories are presented in several different ways.

"Our team has a different approach to search. By using our expertise in search architecture and relevance methods, we have built a more efficient search engine from the ground up. The Internet has grown, and we think it is time that search keeps up," says Anna Patterson in a press release from Cuil. So far, the functionality for Danish is limited, depending on what you are looking for.

Source: http://www.computerworld.dk/art/47164?a=rss&i=0

PixelJunk Eden uses YouTube API for PS3 In-Game Recording

I wonder if there exists an integration/extension/tool between ArcGIS 9.x and YouTube. Publish your maps and animations by clicking the 'YouTube' button.

/Sik

Back in May we reported on a deal struck between Sony and Google to integrate the YouTube API into the PlayStation 3, allowing game developers to let players record videos during gameplay and upload them directly to YouTube. This week Google announced some fruits of that partnership: PS3 developer Q-Games is including recording and direct-upload capabilities in its upcoming PlayStation Network title PixelJunk Eden. On the official YouTube API blog YouTube Syndication Product Marketing Manager Christine Tsai wrote:

- For all you PixelJunk fans, you’ll now be able to capture video of your game recordings and upload directly to YouTube. … We look forward to the day when having YouTube upload support in games will be a standard feature.

Tsai also included an example of a PixelJunk Eden gameplay session recorded to YouTube (click to see the video):

Q-Games President Dylan Cuthbert also mentioned the feature in a post on the official PlayStation blog:

The YouTube upload feature is going to revolutionize how people share [gameplay] tips. Up until now it has been limited to people with video capture equipment, but from now on, anyone can record their game and upload it directly to YouTube from within the game! It is as simple as pushing one button to start and one button to stop and upload, and there is no effect on the gameplay thanks to the power of the PS3 and its abundance of SPU processors.

A demo of PixelJunk Eden is available on the PlayStation Network as of July 24, and the full game is slated for a July 31 release. Other games to take advantage of the YouTube API include Mainichi Issho (as of a May update) and Maxis’ upcoming Spore.

Source: http://blog.programmableweb.com/2008/07/29/pixeljunk-eden-uses-youtube-api-for-ps3-in-game-recording/

Announcing: Virtual Earth ASP.NET Control (CTP Release)

At long last it is FINALLY HERE. I’ve been talking about this control for a long time. Well, we finally have the CTP release of the new Virtual Earth ASP.NET control. ASP.NET developers can now integrate Microsoft Virtual Earth Maps simply by dragging and dropping an ASP.NET Server Control in Visual Studio and Visual Web Developer.

Integrating interactive, immersive maps no longer requires JavaScript; ASP.NET developers can now do it simply. For smooth interactions this control can be combined with ASP.NET AJAX capabilities to provide the power of ASP.NET server-side processing without the development overhead of coding JavaScript. I’ll provide all the relevant links here at the top but you should check at the bottom to make sure you have all the prerequisites.

Key Links

- To download: http://dev.live.com/tools and download the Windows Live Tools July CTP release.

- For a Developer Evaluation account (to access Traffic): https://mappoint-css.live.com/mwssignup/ (requires Live ID)

- Channel 9 Screencast: http://channel9.msdn.com/posts/Mark+Brown/Virtual-Earth-ASPNET-Control-CTP-Release/

- To give feedback: https://connect.microsoft.com/feedback/default.aspx?SiteID=505

Monday, July 28, 2008

Wanted: Your Take on the ESRI User Conference

Maybe I should write a few words, since I'll be there anyway ...

/Sik

Wanted: Your Take on the ESRI User Conference

Help us cover the largest GIS event in the United States and Win an iPod touch!

Here's How!

1) Attend the ESRI International User Conference and compose your thoughts on

- The opening session

- Hottest announcement

- Best third party offering

- Top trick you'll take home

- Technology trend you identified

- A tech or user session

- A co-located event (EdUC, Surveying and Engineering, Remote Sensing)

- Or any other topic that came up during your time in San Diego

2) Share your thoughts:

- Text: Send up to 300 words via text message or e-mail to editors@directionsmag.com. Include your name (first only is ok) and where you are from, as well as an e-mail address (not for publication).

- Audio: Call our comment hotline at 206-984-4409 (Why not store it in your cell phone now?) and leave a 2 minute message. Include your name (first name is ok) and where you are from, as well as an e-mail address (not for publication).

- We'll take the best contributed audio and messages and include them (edited and credited) in our coverage in Directions Magazine, All Points Blog or the Directions Media Podcasts. We’ll be accepting submissions between Saturday August 2 and Wednesday August 13, 2008. We encourage you to submit during the event so you don’t forget that great tip, trick or thought!

3) Win:

- The Directions Media editorial staff will select the contributor of the best coverage to receive an iPod touch! All entries will be eligible for other prizes from Directions Media.

How high is my plot of land?

/Sik

How high is my plot of land?

Denmark's elevations have been surveyed with an accuracy that is among the best in the world. The measurements will be used for, among other things, environmental tasks, coastal protection, construction projects, and in defence, emergency preparedness, etc. From September 2008, citizens will also have access to information about the elevation of an address or cadastral parcel.

"By laser scanning the country, we have carried out a survey of Denmark's elevations with an accuracy that is among the best in the world, partly because a more accurate elevation model is needed so that we can act in good time on environmental and climate changes. The information will be used for planning coastal protection and in construction work etc., so that we can protect the environment and ourselves as well as possible. At the same time, we want to ensure that the elevation information is available to citizens, even though we know that elevation information alone is not enough to assess the risk of one's plot being flooded. Dikes, coastal protection, watercourses, sewers, drains, weather conditions and much else also matter. But citizens should have access to the elevation information as it becomes ready. We expect the first new elevation data to be available to citizens in September," says Deputy Director Nikolaj Veje of Kort & Matrikelstyrelsen (the Danish National Survey and Cadastre).

"A great deal of data and information is produced for professional use that matters for our environment and everyday life. This data and information should be available to citizens, so that they are better able to form their own opinions and take part in the public debate on the development of the environment and the consequences of, for example, climate change. We attach importance to such information becoming available quickly and to the limitations on its use being stated. The initiative to make elevation data available to citizens is an example of this. I believe we will see many examples of similar initiatives in the future," says Deputy Director Nikolaj Veje of Kort & Matrikelstyrelsen.

"Find et sted" with new elevations

The internet service "Find et sted" ("Find a place") at http://www.kms.dk/ is expected, from September, to be able to report the elevation of an address or cadastral parcel for the first areas that are ready. All areas are expected to be ready by the end of the year. The new elevation data is named Danmarks Højdemodel (Denmark's Elevation Model) and will be published via "Find et sted", www.kms.dk/Sepaakort/Digitale+kort/, around September 2008.

Source: http://www.kms.dk/Nyheder/Arkiv/2008/hojderogborgeren.htm

What is GIS for the Google generation?

It's no longer generation X but generation G.

/Sik

What is GIS for the Google generation?

Dr Pablo Mateos spoke (on behalf of a missing Alex Singleton) at the 2008 ESRC Research Methods Festival on "What is GIS for the Google generation?" The ESRC National Centre for Research Methods (NCRM) is a network of research groups, each conducting research and training in an area of social science research methods. The slides are here, and it's tough to tell from them the exact nature of the discussion. Still, I tease out:

- need to distinguish GIS from today's visualization (GIS = science, much of the rest = visualization)

- today's users are part of a "volunteered" generation and users of social media

- need to "make sense of" the "points on a map" situation and explore metadata

There are some valuable (if uncited) stats and images.

Source: http://apb.directionsmag.com/archives/4570-What-is-GIS-for-the-Google-generation.html

Microsoft Moves Photosynth under Virtual Earth Team

If you haven't yet seen PhotoSynth, you've got to - it's a 'must see'. Together with Virtual Earth it's gonna be Great!

/Sik

Microsoft Moves Photosynth under Virtual Earth Team

From Chris Pendleton's Blog:

No longer is Photosynth just a Live Labs research project - it no(w) has full funding and backing from the Virtual Earth team. It helps that we get all of the people that worked on it too.

Recall that Photosynth can take a pile of photos of the same location/feature, "match" them up, and display the "photos in a reconstructed three-dimensional space, showing you how each one relates to the next." The general consensus, with which I agree: it's closer to being a real world product. It's a good thing Chris mentioned this; the Photosynth blog's last post was Sept of last year! I guess they've been busy with other things.

Source: http://apb.directionsmag.com/archives/4565-Microsoft-Moves-Photosynth-under-Virtual-Earth-Team.html

5 Ajax Libraries to Enhance Your Mashup

Web 2.0 at its best

/Sik

5 Ajax Libraries to Enhance Your Mashup

Andres Ferrate, July 28th, 2008

Earlier this year Google released its AJAX Libraries API as a content distribution network and loading architecture for some of the more popular open source JavaScript frameworks (our AJAX Libraries API Profile). According to Google:

The AJAX Libraries API takes the pain out of developing mashups in JavaScript while using a collection of libraries. We take the pain out of hosting the libraries, correctly setting cache headers, staying up to date with the most recent bug fixes, etc.

The API currently provides access to these open source libraries:

The scripts for these libraries can be accessed directly using a <script> tag or via the Google AJAX API Loader’s google.load() method.

If you are a mashup developer that currently utilizes AJAX or if you are looking to utilize these frameworks, it is worth checking out this API, as it has good potential to streamline your development efforts while improving the user experience. Initially it may seem odd to link to these libraries via Google’s API when you can host these libraries locally on your web server, but once you delve into the rationale behind consolidation of these libraries you begin to realize that this approach has several benefits, including speed optimization and consistent versioning.

Yehuda Katz, one of the contributors to the AJAX Libraries API, has a great post on Ajaxian that gives more detail on the many benefits of using this approach, including:

- Caching can be done correctly, and once, by us… and developers have to do nothing

- Gzip works

- We can serve minified versions

- The files are hosted by Google which has a distributed CDN at various points around the world, so the files are “close” to the user

- The servers are fast

- By using the same URLs, if a critical mass of applications use the Google infrastructure, when someone comes to your application the file may already be loaded!

- A subtle performance (and security) issue revolves around the headers that you send up and down. Since you are using a special domain (NOTE: not google.com!), no cookies or other verbose headers will be sent up, saving precious bytes.

Not sure which library to use for your mashup? We have provided a brief roundup of the libraries below to give you an idea of some potential uses.

Read on: http://blog.programmableweb.com/2008/07/28/5-ajax-libraries-to-enhance-your-mashup/

Google Counts More Than 1 Trillion Unique Web URLs

Who was number one trillion?

/Sik

Google Counts More Than 1 Trillion Unique Web URLs

– Juan Carlos Perez, IDG News Service

July 25, 2008

In a discovery that would probably send the Dr. Evil character of the "Austin Powers" movies into cardiac arrest, Google recently detected more than a trillion unique URLs on the Web.

This milestone awed Google search engineers, who are seeing the Web growing by several billion individual pages every day, company officials wrote in a blog post Friday.

In addition to announcing this finding, Google took the opportunity to promote the scope and magnitude of its index.

"We don't index every one of those trillion pages -- many of them are similar to each other, or represent auto-generated content ... that isn't very useful to searchers. But we're proud to have the most comprehensive index of any search engine, and our goal always has been to index all the world's data," wrote Jesse Alpert and Nissan Hajaj, software engineers in Google's Web Search Infrastructure Team.

It had been a while since Google had made public pronouncements about the size of its index, a topic that routinely generated controversy and counterclaims among the major search engine players years ago.

Those days of index-size envy ended when it became clear that most people rarely scan more than two pages of Web results. In other words, what matters is delivering 10 or 20 really relevant Web links, or, even better, a direct factual answer, because few people will wade through 5,000 results to find the desired information.

It will be interesting to see if this announcement from Google, posted on its main official blog, will trigger a round of reactions from rivals like Yahoo, Microsoft and Ask.com.

In the meantime, Google also disclosed interesting information about how and with what frequency it analyzes these links.

"Today, Google downloads the web continuously, collecting updated page information and re-processing the entire web-link graph several times per day. This graph of one trillion URLs is similar to a map made up of one trillion intersections. So multiple times every day, we do the computational equivalent of fully exploring every intersection of every road in the United States. Except it'd be a map about 50,000 times as big as the U.S., with 50,000 times as many roads and intersections," the officials wrote.
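The link-following described here amounts to traversing a directed graph while skipping URLs that have already been seen. As a minimal sketch only, assuming a tiny hand-made link graph (the page names and the `crawl` helper are hypothetical and say nothing about how Google's systems actually work), a breadth-first version in Python could look like this:

```python
from collections import deque

# Toy link graph: each page maps to the pages it links to.
# The page names are invented for illustration only.
LINKS = {
    "home": ["news", "maps"],
    "news": ["home", "archive"],
    "maps": ["archive"],
    "archive": [],
}


def crawl(links, seeds):
    """Breadth-first traversal that visits every reachable page once."""
    seen = set(seeds)
    queue = deque(seeds)
    order = []
    while queue:
        page = queue.popleft()
        order.append(page)
        for target in links.get(page, []):
            if target not in seen:  # skip URLs we have already discovered
                seen.add(target)
                queue.append(target)
    return order


if __name__ == "__main__":
    print(crawl(LINKS, ["home"]))
```

The `seen` set is what keeps the traversal finite when pages link back to each other, which is the same reason a real crawler has to recognise URLs it already knows.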

Copyright © 2008 IDG News Service. All rights reserved. IDG News Service is a trademark of International Data Group, Inc.

Source: http://www.cio.com/article/print/439495

The GeoWeb 2008

James Fee has just come back from GeoWeb 2008.

/Sik

The GeoWeb 2008

July 27th, 2008

First off, I had an absolute blast. The city, the venue and the people have all been just wonderful to experience. Right now especially it is very interesting because the GeoWeb is finally being implemented at larger scales and we are beginning to see the results of those who work hard at trying to realize the promise of what the GeoWeb is. I think Ron Lake puts it best when he says the GeoWeb is the Internet, not some little corner of it. Consider that, for the most part, the Internet can be used by anyone, anywhere, on any platform or device. Simply put, I can collaborate while sitting next to my pool in Tempe, AZ on my iPhone with colleagues using Linux workstations in Abu Dhabi sitting in high rise office buildings. The internet doesn’t care that I have an iPhone any more than it does that they have Firefox on Linux. The same is the case for the GeoWeb: my use of ESRI Servers should not limit someone using FOSS to access and use those services.

Now of course in practice it rarely works out that way. Most ESRI Server implementations don’t enable OGC standards even though ESRI has worked really hard at implementing them. And even FOSS servers don’t necessarily publish OGC formats that the GeoWeb wants to use. The technical limitations of the GeoWeb have been removed and now the problem is cultural. We need to start thinking about how these systems are going to come together and how we’ll be able to collaborate without having to all be on the same platform or language. People always use ESRI as an example of a company that is limiting the GeoWeb by not supporting OGC very well and they’ve probably earned that reputation. But to be fair, there are plenty of FOSS users who want to limit their products or services to only other FOSS systems. While ESRI’s limits might have been technical in nature (though I can see how people might have taken their stance as cultural), the limit of not allowing your products and services to be used by all because of some cultural or personal feelings about the spirit of Microsoft, Apple, ESRI, Oracle, etc. is just as bad. Those who want to take part in this new open environment will grow quickly, and those who put up artificial impediments to their participation will be left behind.

So what does this mean for those who want to see how they can take part in the GeoWeb? Well, first off, make sure you are implementing solutions that aren’t closed. That doesn’t mean that you can’t use things such as Oracle, .NET or ESRI. Make sure those solutions offer up information and data in formats that people can use and build upon what you’ve done. I see great potential for government agencies that allow their data and information to be part of everything from mashups created by some neighborhood group to global companies who want to see new marketplaces and areas for expansion. This should be done through services, not FTP sites or zipped up shapefiles. I can’t be sure my applications are using the latest data if I have to manually browse an FTP site and somehow reconcile my data with yours. A simple service where I can subscribe to information is much simpler for all. Second, end users of the data should begin to recognize that their output shouldn’t be only a paper map or even a PDF. KML, GML, GeoRSS and many of the other standards work very well when accompanying a paper or PDF map.
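To make the point about publishing in open formats a bit more concrete, here is a minimal sketch of writing a single point as KML with Python's standard library. The `placemark_kml` helper and the sample coordinates (a rough location for Tempe, AZ) are illustrative assumptions and not part of any ESRI, Google or OGC tooling; the only things taken from the KML 2.2 standard are the namespace and the longitude,latitude ordering.

```python
import xml.etree.ElementTree as ET

# Namespace of the OGC KML 2.2 standard.
KML_NS = "http://www.opengis.net/kml/2.2"


def placemark_kml(name, lon, lat):
    """Return a KML document string containing one point placemark."""
    ET.register_namespace("", KML_NS)  # emit KML as the default namespace
    kml = ET.Element(f"{{{KML_NS}}}kml")
    placemark = ET.SubElement(kml, f"{{{KML_NS}}}Placemark")
    ET.SubElement(placemark, f"{{{KML_NS}}}name").text = name
    point = ET.SubElement(placemark, f"{{{KML_NS}}}Point")
    # KML orders coordinates as longitude,latitude
    ET.SubElement(point, f"{{{KML_NS}}}coordinates").text = f"{lon},{lat}"
    return ET.tostring(kml, encoding="unicode")


if __name__ == "__main__":
    # Approximate, illustrative coordinates only.
    print(placemark_kml("Tempe, AZ", -111.94, 33.42))
```

A file like this can sit alongside a PDF map, or be served from a URL so that clients always fetch the current version instead of reconciling downloaded copies.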

Making your data discoverable is also very important. That I would spend time making my data easily usable and not take the time to make it discoverable hurts my implementation. Making sure Google and Microsoft (assuming Live Search ever gets fixed) are crawling your information is critical to its acceptance. We will begin seeing spatial results start showing up in Google very soon and when that happens, those services will become extremely popular. If I’m the County of Maricopa, I don’t want my data hidden behind some old MapGuide ActiveX control, but published as discoverable services that people can subscribe to and use. Think of it simply: if your data isn’t discoverable via Google search, someone else’s will be, and the parcel information that shows up will not be under your control. You can choose to ignore spatial search, but someone else is sure to step into your space and offer such services.

The time spent with everyone in Vancouver was well spent and I’ll continue to post what I saw and learned over this next week. Seeing real world implementations that take advantage of what the GeoWeb offers, and seeing how successful those are, really validates the vision. It is still early enough in the process to be on the ground floor and there are still huge hurdles as far as data standards and security that need to be addressed, so getting involved now can only help everyone. The idea that 10 people can use 10 different software packages and collaborate on geospatial products is very powerful. And of course the added benefit is that you can choose the software platform that best meets your needs and not worry about matching your clients’ or consumers’ platforms. That saves everyone time and money, just like the Internet itself has done.

Source: http://www.spatiallyadjusted.com/2008/07/27/the-geoweb-2008/

Plenary to Showcase Geography in Action Today, The Future of GIS

Next Monday's program at the ESRI International User Conference in San Diego

/Sik

Plenary to Showcase Geography in Action Today, The Future of GIS

The 2008 ESRI UC promises to be the best to date, with this year's theme, GIS: Geography in Action, a major focus of the first day's activities. Featuring the latest innovations in ArcGIS 9.3, user successes, and a glimpse into the future of GIS, the Monday Plenary Session will provide you a rich learning experience.

ESRI President Jack Dangermond will host the day's events, starting with a welcome and a chance to introduce yourself to fellow attendees. One of the highlights every year is when Jack acknowledges many examples of users' work. Several user-focused awards, including the Making A Difference and President's Award, will be presented.

After celebrating the accomplishments of GIS users, the session will focus on Jack's vision for GIS in action. You'll see the latest advancements in ArcGIS 9.3 that will help make you more productive including new capabilities for connecting ArcGIS Desktop and Server to the GeoWeb.

The afternoon session will look toward the future of GIS, our profession, and our planet. Jack will be joined by ESRI staff to give you an exclusive glimpse into the future of ArcGIS. They will showcase the newest GIS research and development using the ESRI software and solutions suite.

The always amazing K-12 Education special presentation will feature the application of geography and GIS by today's youth. We'll honor both students and educators doing terrific GIS work in the classroom.

This year's keynote address will be given by renowned botanist, environmentalist, biodiversity expert, and director of the Missouri Botanical Garden, Dr. Peter Raven. Dr. Raven will discuss the significance of plant life and the environment in sustaining our world, as well as the threats ecosystems face today. Learn about the numerous threats, such as the destruction of natural habitats, over-consumption, and climate change, that are affecting biodiversity. Dr. Raven will also discuss how we can help preserve and improve our planet's sustainability.

The first day of the conference wraps up with the Map Gallery Opening and Welcome Reception, where you can view a myriad of examples of GIS users making a difference using geography in action. The reception begins immediately following the Plenary Session.

Source: http://blogs.esri.com/Info/blogs/ucblog/archive/2008/07/27/plenary-to-showcase-geography-in-action-today-the-future-of-gis.aspx

Saturday, July 26, 2008

GIS Day 2008 Promotional Flier

Be part of the international GIS Day on November 19th!

/Sik

GIS Day 2008 Promotional Flier

The new GIS Day 2008 flier is now available! GIS Day is fast approaching on November 19th and the new flier provides both information and inspiration for celebrating your event. This colorful flier encourages educators, professionals, and students alike to participate in this global event. Download the flier to help you get the word out about GIS Day.

ESRI International User Conference 2008

ESRI is the biggest player on the GIS market globally and that has not gone unnoticed by software giants Google and Microsoft. Both companies have spent a lot of time publicly announcing this and that strategic partnership with the 'little' American company in Redlands. ESRI has not officially favoured either of them. It is, however, no secret that Microsoft has played a major role in the development of the ArcGIS Server technology. It is going to be very interesting to see what the future might bring, but hopefully ESRI will manage to stay independent and not become part of either of the aforementioned.

/Sik

Virtual Earth, An Evangelist's Blog

Awwwwe yeah, I’m coming home San Diego! I love a free trip home. Microsoft Virtual Earth will have a significant presence at the ESRI International User Conference in San Diego August 4 - 8, 2008. We’ve had some strategic announcements with ESRI this year and with the integration of Virtual Earth and ArcGIS Server things just got interesting. At the very least, we’ll have a booth at the show and I’ll be working the first 2 days (others will work all week), so if you want to chew the fat stop by and let’s talk shop. Since I know at least one person will ask, yes, Roger Mall (who has spearheaded our Microsoft / ESRI and many other partner relationships) will be there for the whole show.

There’s already one session (actually a pre-conference seminar) set in stone, but with the unpredictability of the future you never know what’s going to happen between now and then. The current seminar is titled, "Rapid Web Development using ArcGIS Server JavaScript and REST API" and features the ArcGIS JavaScript Extender for Virtual Earth. It’s $325 and it’s on August 3, so you might want to get to the conference a few days early. Dude, it’s in San Diego - how money is it that you can tell your boss you HAVE TO go to San Diego a couple days early for training.

After the seminar, you should be able to make cool applications like this integration of Virtual Earth and ArcGIS Drive Time Polygons. This is a sick little app (free download) that renders drive time zones from any point clicked on the map. The shot below outlines the distance you would drive in 1 minute (gray, covered with pins), 5 minutes (orange) and 10 minutes (pink) from the center of the map. The query also hits the Live Search local listings using the VEMap.Find method in the Virtual Earth API, populating the "what" argument with any keyword (burrito shops in this case). If you don’t code and want to see this in action, you can view it from my Live Skydrive site.